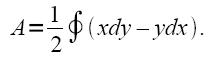

This activity explores the properties of the Fourier transform.

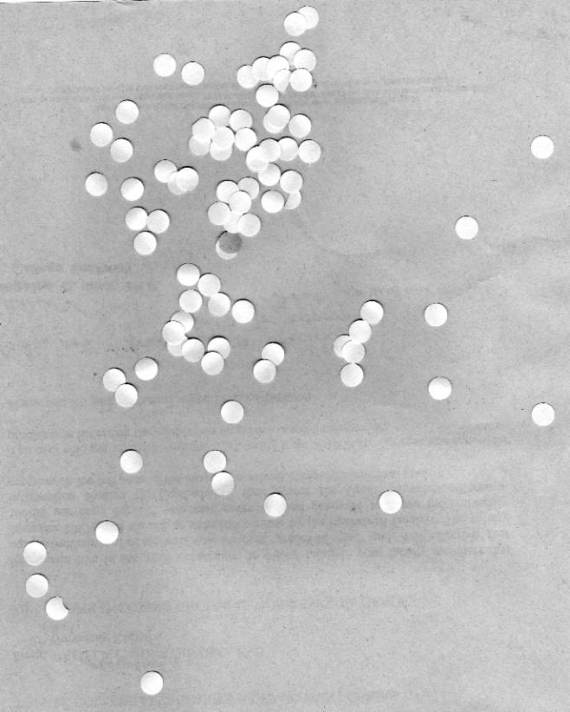

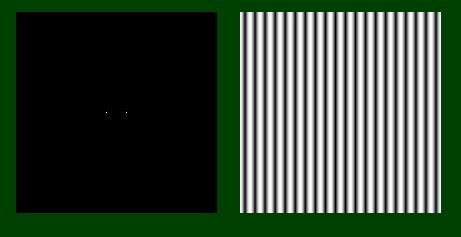

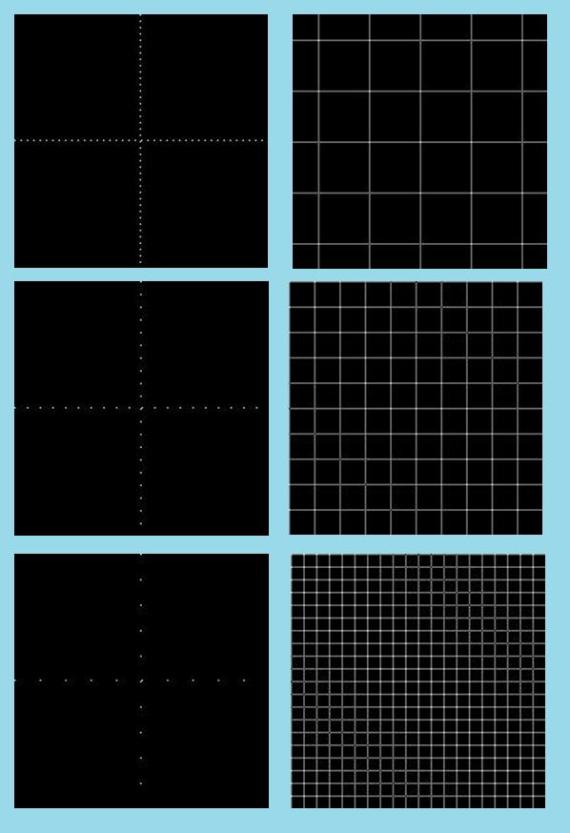

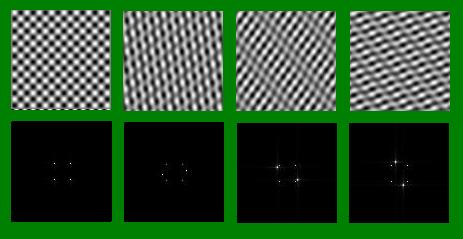

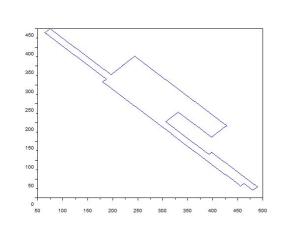

The first part mainly involved becoming familiar with the basic Scilab commands for the Fourier transform, such as fft2 and fftshift. Two images were used for this part: a circle and the letter A. After loading each image with imread, fft2 was used to obtain its Fourier transform. fft2 returns a matrix of complex numbers, which cannot be displayed directly as an image, so abs() was also applied to obtain the modulus of each matrix element. fftshift, on the other hand, swaps the quadrants of the matrix so that the zero-frequency component, which normally has the largest magnitude, moves from the corner to the center. The images obtained for the whole process are presented in Figure 1.

Figure 1. Applying the fast Fourier transform commands to the images. From left to right: grayscale of the original image, result of fft2, result of fftshift, result of applying fft2 a second time.
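As a rough illustration of these three commands, the sketch below builds a synthetic circle (similar to the commented-out code at the end of this post) instead of reading a file, so it needs only core Scilab. The size n = 64 is an arbitrary choice for the example and not the size used in the activity.

```scilab
// Synthetic stand-in for the circle image (assumed size, no image file needed).
n = 64;
x = linspace(-1, 1, n);
[X, Y] = meshgrid(x, x);
circle = zeros(n, n);
circle(find(sqrt(X.^2 + Y.^2) <= 0.5)) = 1;

F = fft2(circle);        // complex-valued spectrum
mag = abs(F);            // modulus, which can be displayed as an image

// Before shifting, the zero-frequency term is at index (1,1).
[m1, k1] = max(mag);     // k1 = [row, col] of the largest magnitude

// fftshift swaps the quadrants, so the zero-frequency term moves to the center.
shifted = fftshift(mag);
[m2, k2] = max(shifted);

disp(k1);
disp(k2);
```

With an even n, the largest magnitude moves from index (1,1) to (n/2+1, n/2+1), which is why the shifted spectrum looks centered.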

Due to the scale and low resolution of the images, the results are not very clear. However, it can still be seen that taking the Fourier transform of a Fourier transform returns the original image, but inverted.
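The inversion is a general property of the discrete Fourier transform: applying the forward transform twice returns the input with its indices reversed (modulo the image size) and scaled by the number of elements. A small check of this, using made-up values chosen to be asymmetric so that the flip is visible:

```scilab
// Small asymmetric test matrix (hypothetical values).
A = [1 2 3 4; 5 6 7 8; 9 10 11 12; 13 14 15 16];
n = size(A, 1);

// Two forward transforms; divide by the number of elements to undo the scaling.
B = real(fft2(fft2(A))) / (n * n);

// Index reversal modulo n: index 1 stays in place, the others are mirrored.
idx = [1, n:-1:2];
expected = A(idx, idx);

// Difference should be at round-off level only.
disp(max(abs(B - expected)));
```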

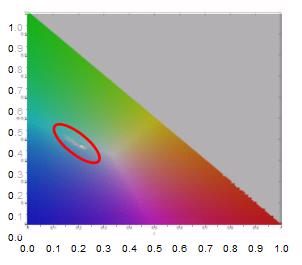

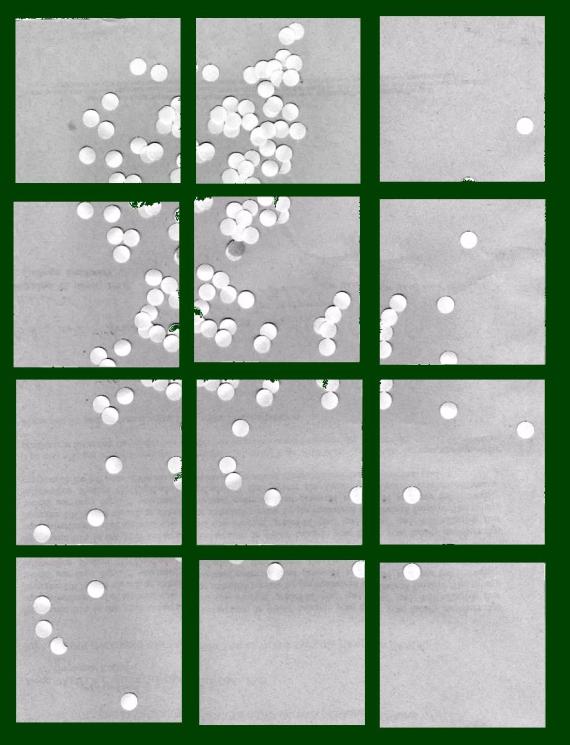

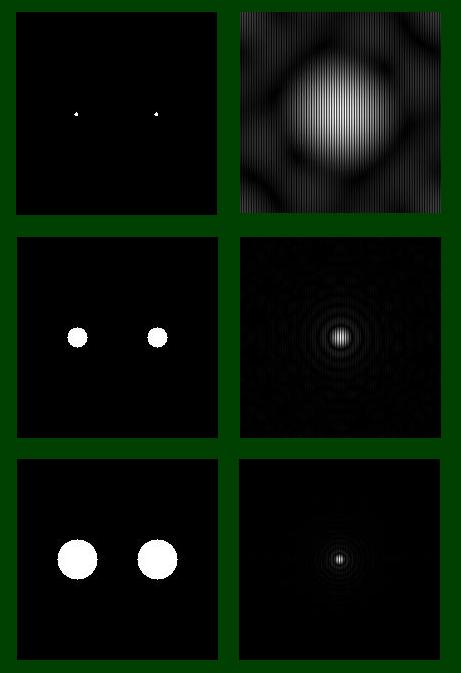

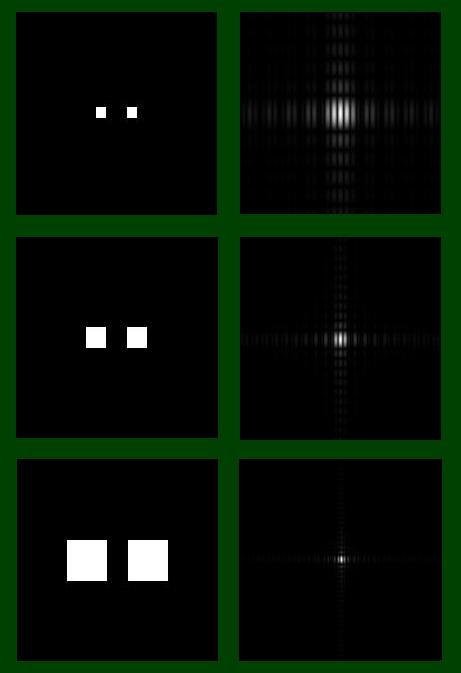

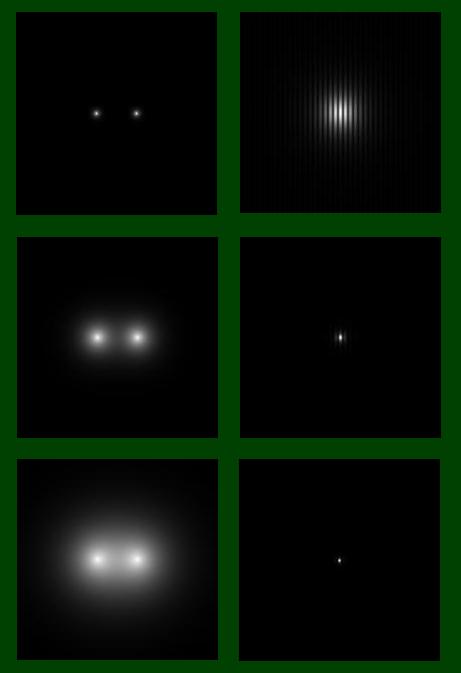

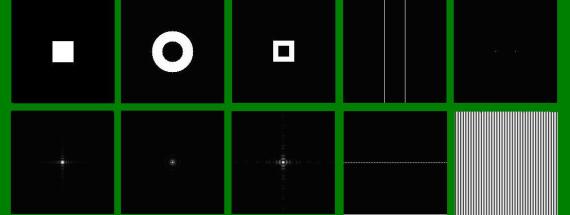

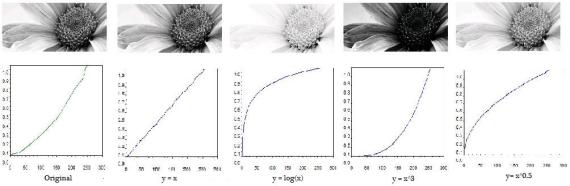

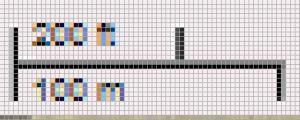

Next, the convolution of two images was analyzed by simulating an imaging device. Two 128×128-pixel images were used, both with a black background. The image with the white 'VIP' text serves as the object of interest, while a white circle serves as the aperture. The two images were convolved by multiplying them in the Fourier domain, following the rule that if h = f*g then H = FG, where (*) denotes convolution, lowercase letters denote functions, and capital letters denote the Fourier transforms of the corresponding lowercase functions. In the code, the aperture is treated as already being in the Fourier plane, so only the 'VIP' image is passed through fft2, while the aperture is only rearranged with fftshift. The clarity of the resulting image depends on the radius of the aperture: the image becomes more blurred as the radius decreases. Figure 2 shows two sets of images. The leftmost image in each set is the aperture and the rightmost is the result.
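The effect of the aperture radius can be sketched with synthetic data. Everything below is an assumption made for illustration: the object is a plain white rectangle rather than the 'VIP' image, the helper name image_through_aperture is hypothetical, and ifft is used for the return trip where my own code applies fft2 a second time (which gives the same picture, only inverted).

```scilab
n = 64;                                  // assumed size for the example
x = ((0:n-1) - n/2) / (n/2);             // zero lands exactly on index n/2+1
[X, Y] = meshgrid(x, x);
r = sqrt(X.^2 + Y.^2);

// Stand-in object: a white rectangle on a black background.
obj = zeros(n, n);
obj(25:40, 20:45) = 1;

// Hypothetical helper: image the object through a circular aperture.
function img = image_through_aperture(obj, r, radius)
    aperture = zeros(size(r, 1), size(r, 2));
    aperture(find(r <= radius)) = 1;
    H = fftshift(aperture);              // aperture is already in the Fourier plane
    img = abs(ifft(H .* fft2(obj)));     // multiply spectra, then transform back
endfunction

blurry = image_through_aperture(obj, r, 0.1);
sharp  = image_through_aperture(obj, r, 0.6);

// Mean squared difference from the original object as a crude blur measure.
err_blurry = sum((blurry - obj).^2) / (n * n);
err_sharp  = sum((sharp  - obj).^2) / (n * n);
mprintf("radius 0.1: %f, radius 0.6: %f\n", err_blurry, err_sharp);
```

The smaller aperture passes fewer spatial frequencies, so its output differs more from the object, which matches the blurring seen in the activity.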

Figure 2. Simulation of an imaging device.

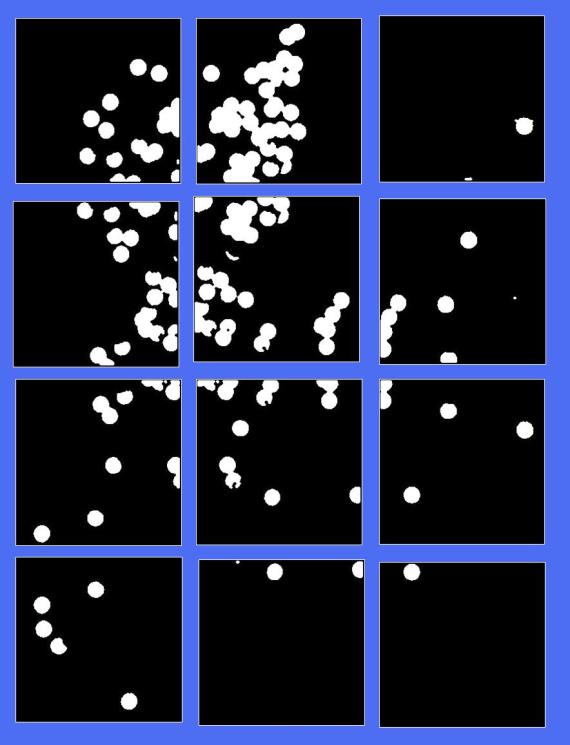

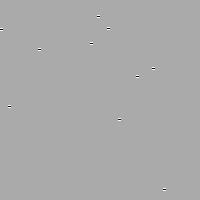

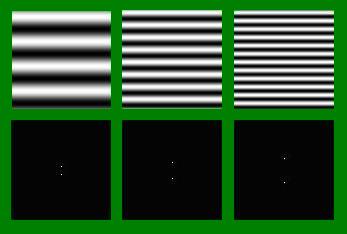

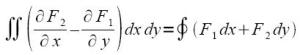

The third part of the activity uses the fast Fourier transform to obtain the correlation of two images. Correlation is a measure of the similarity between two functions. It is related to the Fourier transform as follows: if p is the correlation of the functions f and g, and P, F, and G are the Fourier transforms of p, f, and g, then P = conj(F)·G, where conj(F) is the complex conjugate of F.

For this, we compared an image containing the phrase 'THE RAIN IN SPAIN STAYS MAINLY IN THE PLAIN' with an image containing the letter 'A' in the same font. The images and their correlation can be seen in Figure 3. Five bright spots can be seen in the resulting image, the same as the number of A's in the first image; these mark the positions with the highest correlation with the second image.
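The same idea can be tried on a tiny synthetic case. In this sketch the 3×3 glyph, the scene size, and the three placement positions are all made up, and ifft is used for the return transform, so the peaks land directly on the top-left corner of each copy of the glyph. It is only meant to show the correlation theorem at work, not to reproduce Figure 3.

```scilab
n = 64;                                  // assumed scene size
glyph = [1 1 1; 1 0 0; 1 0 0];           // hypothetical stand-in for the letter

// Scene with three copies of the glyph at made-up positions.
scene = zeros(n, n);
rows = [5 20 40];
cols = [8 30 12];
for k = 1:3
    scene(rows(k):rows(k)+2, cols(k):cols(k)+2) = glyph;
end

// Template: same size as the scene, glyph in the top-left corner.
template = zeros(n, n);
template(1:3, 1:3) = glyph;

// Correlation theorem: multiply conj(FT of template) by FT of scene, transform back.
P = conj(fft2(template)) .* fft2(scene);
score = real(ifft(P));

// Positions where the correlation reaches its maximum.
[pr, pc] = find(score > max(score) - 0.5);
disp([pr(:), pc(:)]);
```

The maxima are found at the three positions where the glyph was placed, in the same way that the bright spots mark the A's in the text image.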

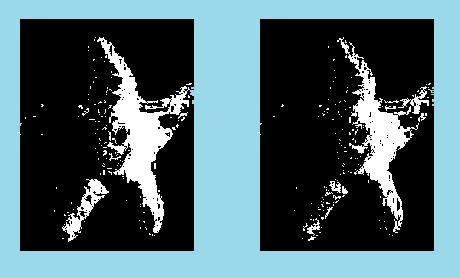

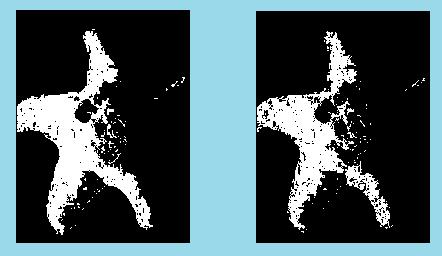

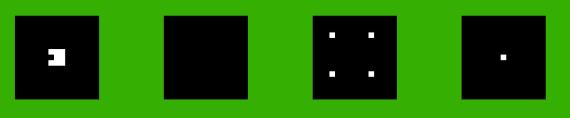

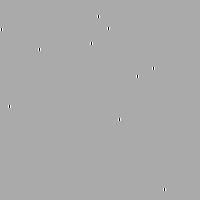

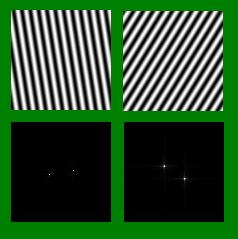

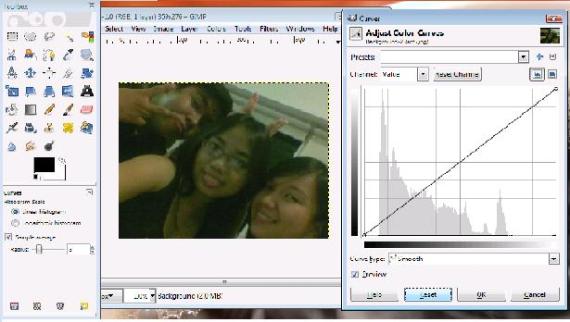

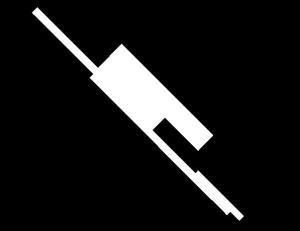

Finally, we were asked to perform edge detection using the convolution integral. Here, we took the same 'VIP' image used in part two and convolved it with different 3×3 matrix patterns whose elements sum to zero. I used the following matrices as my patterns:

pattern1= [-1 -1 -1; 2 2 2; -1 -1 -1];

pattern2= [-1 2 -1; -1 2 -1; -1 2 -1];

pattern3= [-1 -1 -1; -1 8 -1; -1 -1 -1];

pattern4 = [1 1 1; 1 -8 1; 1 1 1];

Comparing the results for patterns 1 and 2 (top two images in Figure 4), the horizontal edges of the letters are more defined for pattern 1, while the vertical edges are more defined for pattern 2. This matches the orientation of the line of 2s in each pattern: a row in pattern 1 and a column in pattern 2. Comparing the results for patterns 3 and 4 (bottom two images in Figure 4), the dark outline of the letters appears at different positions. For pattern 3 the dark outline appears after the white outline, while for pattern 4 it is the other way around. In both cases, however, the position of the outline corresponds to the position of the negative sign in the matrix pattern.
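The directional behavior of patterns 1 and 2 can be checked on a simple shape. This sketch assumes core Scilab's conv2 (my own code at the end of this post uses imcorrcoef instead) and a made-up 16×16 image of a white square, so the numbers are only illustrative.

```scilab
// White square on a black background (hypothetical test image).
img = zeros(16, 16);
img(5:12, 5:12) = 1;

horizontal = [-1 -1 -1; 2 2 2; -1 -1 -1];   // same as pattern1
vertical   = [-1 2 -1; -1 2 -1; -1 2 -1];   // same as pattern2

// "same" keeps the output the same size as the input image.
eh = conv2(img, horizontal, "same");
ev = conv2(img, vertical, "same");

// Sample points: middle of the top edge, middle of the left edge, interior.
mprintf("top edge : horizontal %g, vertical %g\n", eh(5, 8), ev(5, 8));
mprintf("left edge: horizontal %g, vertical %g\n", eh(8, 5), ev(8, 5));
mprintf("interior : horizontal %g, vertical %g\n", eh(8, 8), ev(8, 8));
```

Because each pattern sums to zero, flat regions give no response; pattern 1 responds only on the horizontal edge and pattern 2 only on the vertical edge at these sample points.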

I give myself a grade of 9 for being able to simulate what was asked.

I would like to thank Tisza and Jonats, with whom I confirmed that I was getting the right results.

Sources:

[1] Dr. Maricor Soriano, "A6 – Fourier Transform Model of Image Formation", 2010.

Scilab codes:

//6A. circle

//x = [-1:0.01:1];

//[X,Y] = meshgrid(x);

//r = sqrt(X.^2 + Y.^2);

//circle = zeros(size(X,1), size(X,2));

//circle(find (r <=0.5)) = 1.0;

//subplot(1,5,1), imshow(circle,[]);

//Igray = im2gray(circle);

//FIgray = fft2(Igray); //remember, FIgray is complex

I = imread('D:\files\kaye\186\circle_6_3.bmp');

//Igray = im2gray(I);

//FIgray = fft2(Igray); //remember, FIgray is complex

//subplot(1,5,1), imshow(I, []);

//subplot(1,5,2), imshow(abs(FIgray),[]);

//subplot(1,5,3), imshow(fftshift(abs(FIgray)), []);

//subplot(1,5,4), imshow(abs(fft2(FIgray)),[]);

//subplot(1,5,5), imshow(abs(fft2(fft2(I))),[]);

//6A. letter A

//I = imread('D:\files\kaye\186\letterA.bmp');

//image=(I-min(I))/(max(I)-min(I));

//subplot(1,5,1), imshow(image);

//Igray = im2gray(image);

//FIgray = fft2(Igray); //remember, FIgray is complex

//subplot(1,5,2), imshow(abs(FIgray),[]);

//subplot(1,5,3), imshow(fftshift(abs(FIgray)), []);

//subplot(1,5,4), imshow(abs(fft2(FIgray)));

//subplot(1,5,5), imshow(abs(fft2(fft2(image))));

//6B. convolution

//rgray = im2gray(I);

//image = imread('D:\files\kaye\186\VIP.bmp');

//agray = im2gray(image);

//Fr = fftshift(rgray);

//aperture is already in the Fourier plane and need not be FFT'ed

//Fa = fft2(agray);

//FRA = Fr.*(Fa);

//IRA = fft2(FRA); //second forward FFT stands in for the inverse FFT (output is inverted)

//FImage = abs(IRA);

//final = (FImage-min(FImage))/(max(FImage)-min(FImage));

//imshow(final);

//imwrite(final, 'D:\files\kaye\186\vip_mid_aperture.jpg');

//6C. Template matching using correlation

//text = imread('D:\files\kaye\186\text.bmp');

//text_gray= im2gray(text);

//a = imread('D:\files\kaye\186\a.bmp');

//a_gray = im2gray(a);

//ftext = fft2(text_gray);

//fa = fft2(a_gray);

//im = fa.*(conj(ftext));

//FImage = fft2(im);

//FImage= abs(FImage);

//imshow(FImage, []);

//final = (FImage-min(FImage))/(max(FImage)-min(FImage));

//imwrite(final, 'D:\files\kaye\186\correalation.jpg');

//6D. Edge detection using the convolution integral

pattern1= [-1 -1 -1; 2 2 2; -1 -1 -1];

pattern2= [-1 2 -1; -1 2 -1; -1 2 -1];

pattern3= [-1 -1 -1; -1 8 -1; -1 -1 -1];

pattern4 = [1 1 1; 1 -8 1; 1 1 1];

pattern5 = [-3 -3 -3; 2 2 2; 1 1 1];

image = imread('D:\files\kaye\186\VIP.bmp');

gray = im2gray(image);

result = imcorrcoef(gray, pattern1);

result = (result - min(result))/(max(result) - min(result)); //normalize to the range [0, 1]

imshow(result);

imwrite(result, 'D:\files\kaye\186\6D_1.jpg');

//repeat for all patterns

Note: remove '//' to un-comment.