Fourier transform (2D): mathematical representation of an image as a series of two-dimensional sine waves.

-http://www.wadsworth.org/spider_doc/spider/docs/glossary.html

This activity deals with the properties of the 2D Fourier Transform.

Familiarization with FT of Different 2D Patterns

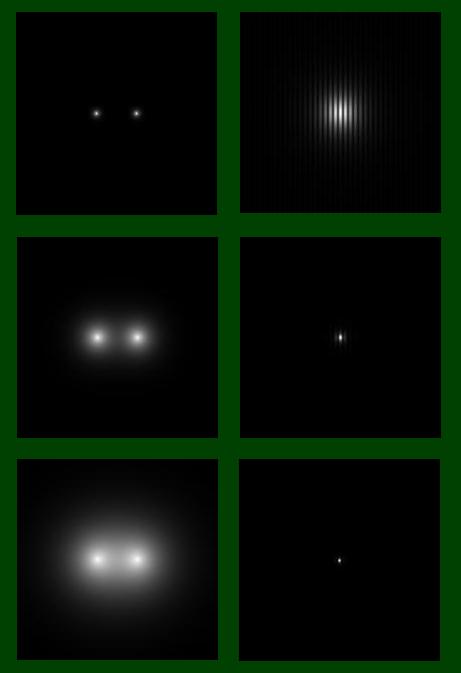

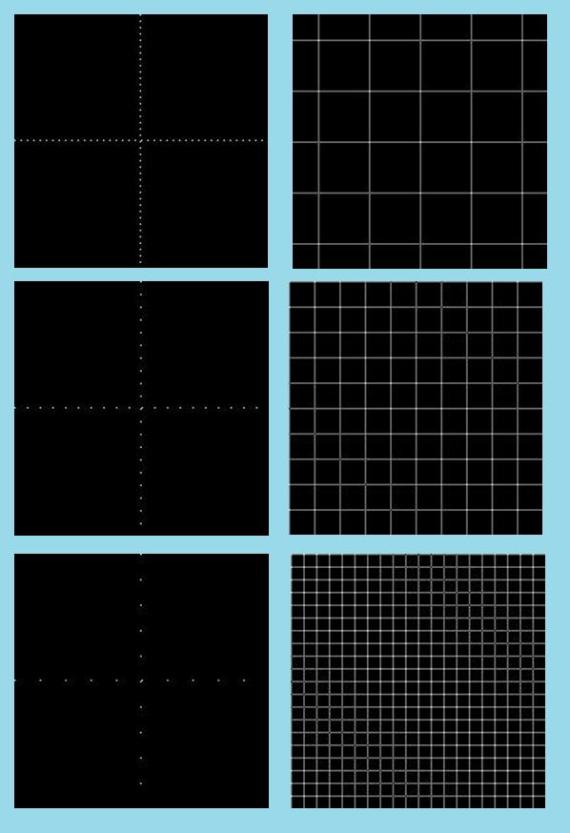

For the first part of this activity, we familiarized ourselves with the Fourier Transform by applying it to several 2D patterns, namely:

-square

-annulus

-square annulus

-2 slits

-2 dots

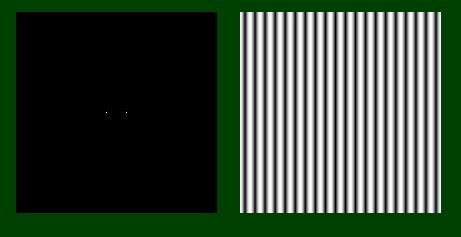

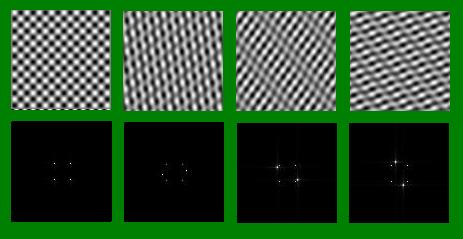

The resulting Fourier Transforms are shown below:

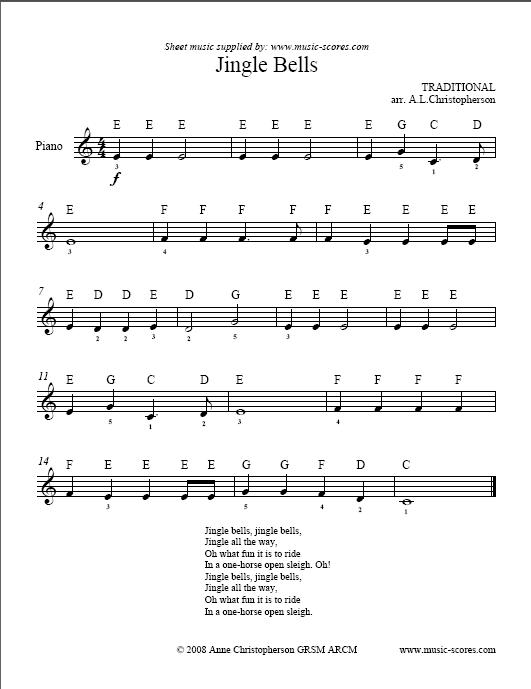

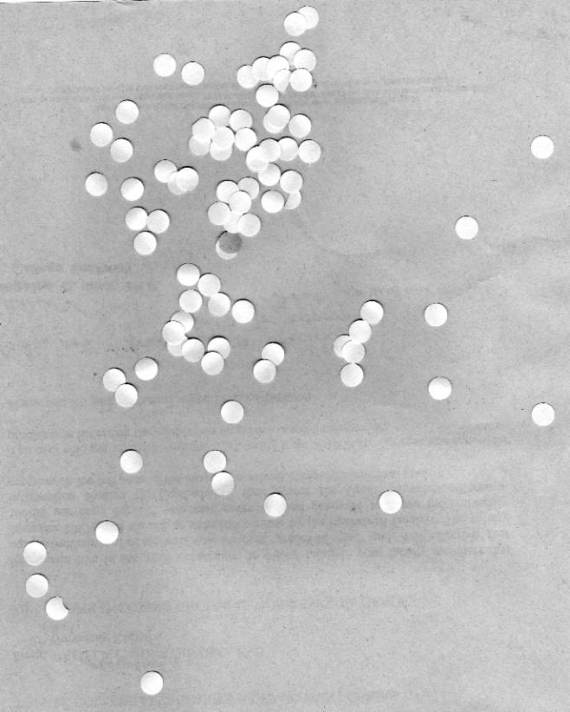

Figure 1. 2D patterns (top) and their Fourier transforms (bottom)
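As a rough illustration of how such a transform can be computed, here is a minimal NumPy sketch for the square pattern only. The image size (128), the square's half-width (8), and the helper names `centered_square` and `ft_modulus` are my own assumptions for the example, not the values or code used to produce Figure 1.

```python
import numpy as np

def centered_square(n=128, half_width=8):
    """White square of side 2*half_width centered in an n x n black image (sizes are assumed)."""
    img = np.zeros((n, n))
    c = n // 2
    img[c - half_width:c + half_width, c - half_width:c + half_width] = 1.0
    return img

def ft_modulus(img):
    """Modulus of the 2D FFT, with the zero-frequency term shifted to the image center."""
    return np.abs(np.fft.fftshift(np.fft.fft2(img)))

square = centered_square()
spectrum = ft_modulus(square)

# Location of the brightest point of the spectrum (row, column)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(spectrum), spectrum.shape))
print(peak, spectrum[peak])
```

For a non-negative pattern like this one, the brightest point sits at the center of the shifted spectrum, and its value equals the sum of all pixel values in the pattern.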

Anamorphic Property of the Fourier Transform

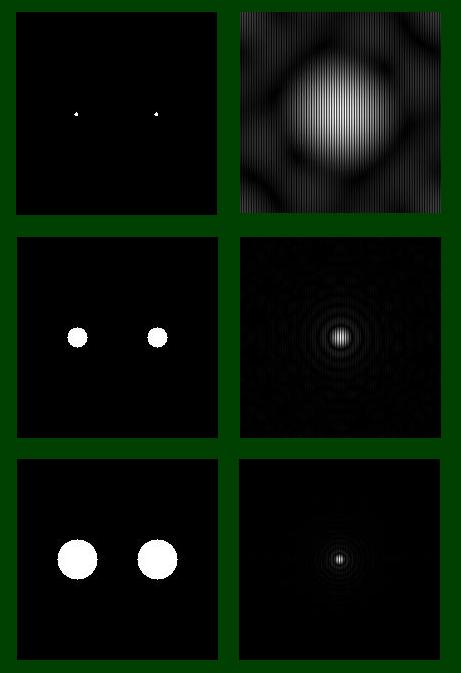

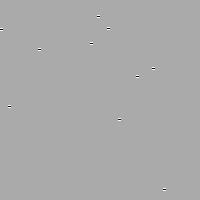

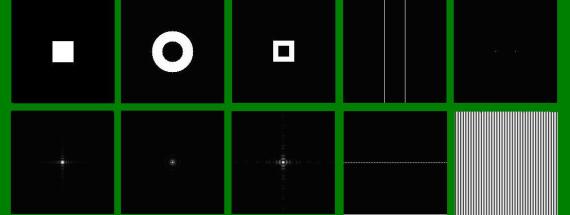

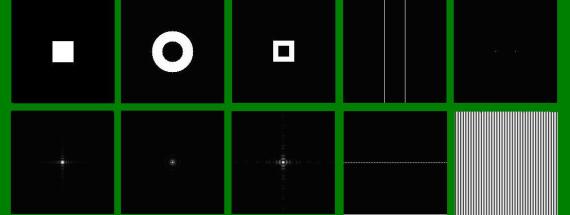

Here we investigate how the Fourier Transform varies with certain parameters of the patterns. In particular, we want to know how the FT of a 2D sinusoid changes with frequency and rotation.

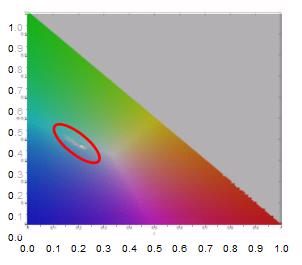

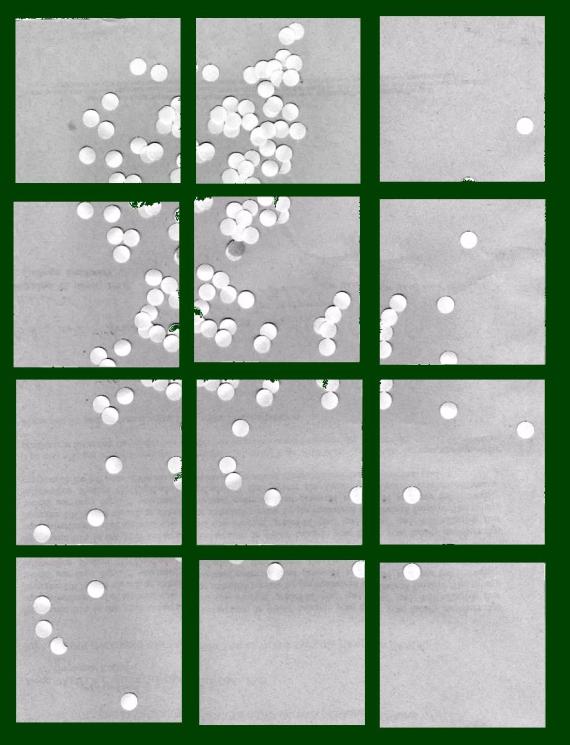

From the images below, we can see that as the frequency of the sinusoid increases, the two dots in its FT, which are symmetric about the x-axis, move farther apart.

Figure 2. Top images are 2D sinusoids of increasing frequencies. Bottom images are their Fourier transforms.
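The relation between frequency and dot separation can be checked numerically. The sketch below assumes an unbiased sinusoid that varies along y, sampled on a 128 x 128 grid spanning one unit length, with a whole number of cycles; the function name `sinusoid_peak_separation` and these parameters are hypothetical choices for the example.

```python
import numpy as np

def sinusoid_peak_separation(freq, n=128):
    """Distance in pixels between the two FT peaks of a sinusoid with `freq` cycles along y."""
    x = np.arange(n) / n                    # n samples covering one unit length
    X, Y = np.meshgrid(x, x)                # Y varies along rows, X along columns
    img = np.sin(2 * np.pi * freq * Y)      # assumed form: no bias, no rotation
    spectrum = np.abs(np.fft.fftshift(np.fft.fft2(img)))

    # Indices of the two largest values of the spectrum
    top_two = np.argsort(spectrum.ravel())[-2:]
    rows, cols = np.unravel_index(top_two, spectrum.shape)
    return float(np.hypot(rows[0] - rows[1], cols[0] - cols[1]))

for f in (4, 8, 16):
    print(f, sinusoid_peak_separation(f))
```

Under these assumptions the two peaks sit at plus and minus the sinusoid's frequency, so the separation comes out as twice the number of cycles across the image.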

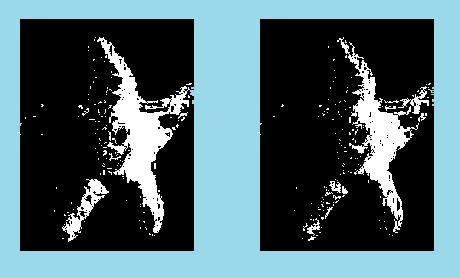

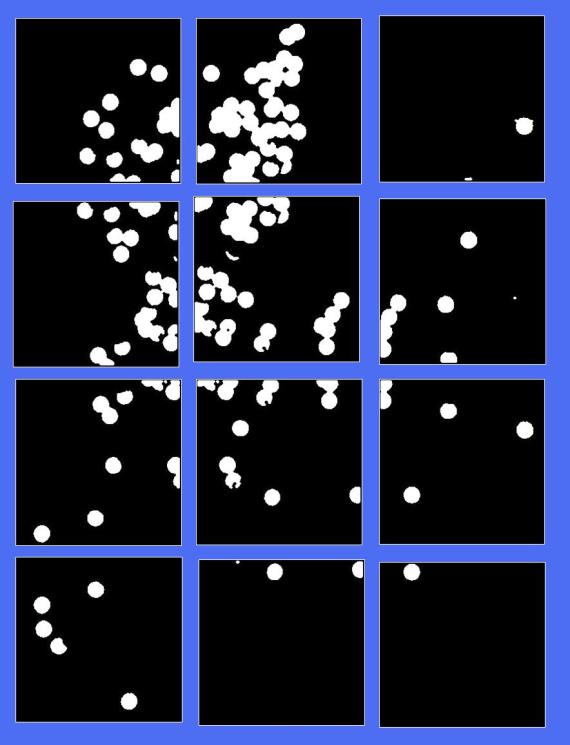

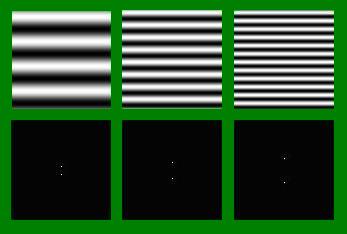

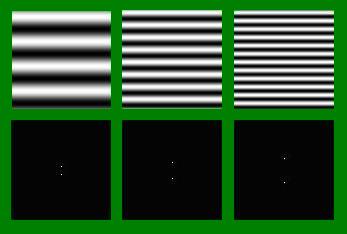

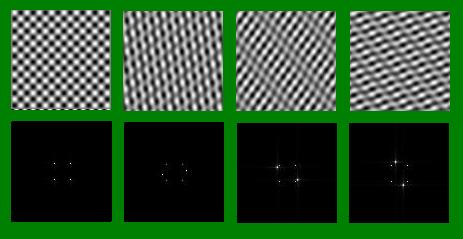

Rotating the sinusoid also changes its FT, but in a somewhat reversed sense relative to a sinusoid that is parallel to the x-axis. In the example shown below, I rotated the sinusoids by 30 degrees and 45 degrees. Applying the FT to these images resulted in 2 dots ‘rotated’ by approximately the same amount but in the other direction. (I know ‘rotated’ is not the proper term, but I hope you get what I mean.)

Figure 3. Rotated sinusoids (top) and their respective Fourier Transforms (bottom)
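To put a number on the ‘rotation’ of the dots, one can locate the brightest point of the FT and measure its angle from the horizontal frequency axis. This is only a sketch: the form of the rotated sinusoid, the grid size (256), the frequency (16), and the name `peak_angle_deg` are assumptions of mine. The angle is measured in array coordinates, so the apparent sense of rotation on screen may differ depending on how the image is displayed.

```python
import numpy as np

def peak_angle_deg(theta_deg, freq=16, n=256):
    """Angle (degrees, folded into [0, 180)) of the FT peak of a sinusoid rotated by theta_deg."""
    theta = np.deg2rad(theta_deg)
    x = np.arange(n) / n
    X, Y = np.meshgrid(x, x)
    # Assumed form: the sinusoid varies along the direction (cos(theta), sin(theta))
    img = np.sin(2 * np.pi * freq * (X * np.cos(theta) + Y * np.sin(theta)))
    spectrum = np.abs(np.fft.fftshift(np.fft.fft2(img)))

    row, col = np.unravel_index(np.argmax(spectrum), spectrum.shape)
    fy, fx = int(row) - n // 2, int(col) - n // 2   # offsets from the spectrum center
    # The two peaks are mirror images through the center, so fold the angle into [0, 180)
    return float(np.rad2deg(np.arctan2(fy, fx)) % 180.0)

for angle in (30, 45):
    print(angle, peak_angle_deg(angle))
```

In this sketch the dots line up along the direction in which the sinusoid varies, which is perpendicular to its stripes. The recovered angle is only approximate because the peak has to fall on a whole-pixel frequency bin.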

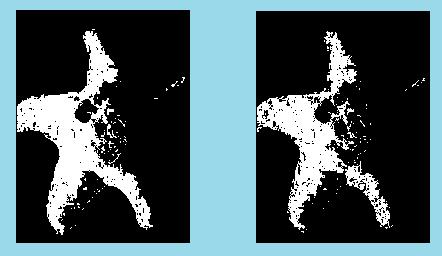

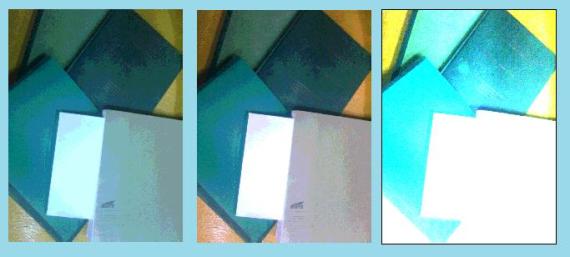

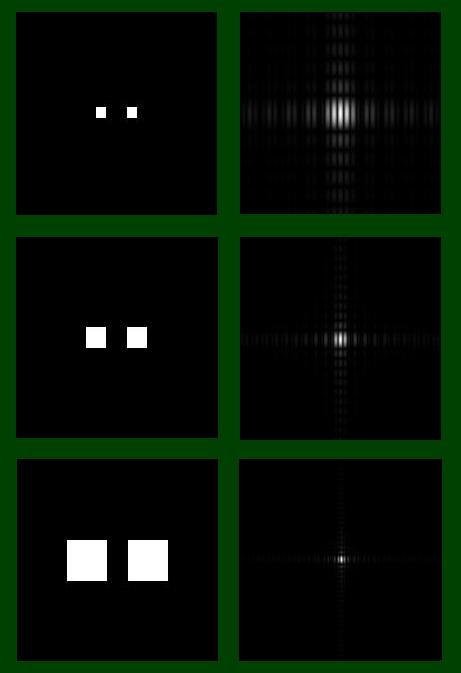

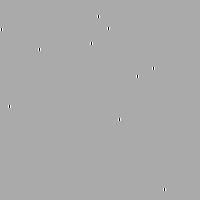

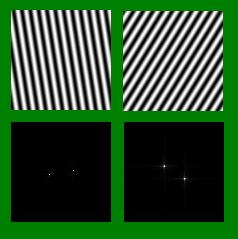

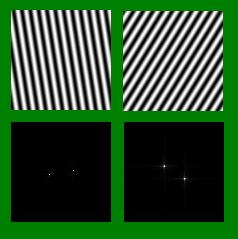

Finally, we look at how the FT applies to combinations of different patterns. Below we see a series of combinations of rotated sinusoids. Our base pattern is the first image shown, which is a combination of two 2D sinusoids, one parallel to the x-axis and another to the y-axis. We then add a third sinusoid rotated at different angles.

Figure 4. Combinations of rotated sinusoids (top) and their Fourier transforms (bottom)

Comparing their FTs, we can see that 4 dots appear consistently in all four images, while for the last three patterns two additional dots appear that behave like those in the FTs of the rotated sinusoids shown in Figure 3. The FT of each combined pattern seems to be a combination of the FTs of its constituent patterns.
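This observation is consistent with the linearity of the Fourier transform: the FT of a sum of patterns equals the sum of their FTs. A small numerical check is sketched below, assuming the patterns are combined by addition; the grid size, the frequency (4 cycles), the 30-degree angle, and the helper `rotated_sinusoid` are illustrative choices of mine.

```python
import numpy as np

n = 128
x = np.arange(n) / n
X, Y = np.meshgrid(x, x)

def rotated_sinusoid(freq, theta_deg):
    """Sinusoid with `freq` cycles per unit length, varying along the direction theta_deg."""
    theta = np.deg2rad(theta_deg)
    return np.sin(2 * np.pi * freq * (X * np.cos(theta) + Y * np.sin(theta)))

# Base pattern: one sinusoid along x plus one along y; then a third, rotated one
base = rotated_sinusoid(4, 0) + rotated_sinusoid(4, 90)
third = rotated_sinusoid(4, 30)

# Linearity: FT(base + third) should match FT(base) + FT(third)
ft_of_sum = np.fft.fft2(base + third)
sum_of_fts = np.fft.fft2(base) + np.fft.fft2(third)
linear = bool(np.allclose(ft_of_sum, sum_of_fts))

# Count the bright dots in the FT of the base pattern alone
base_spectrum = np.abs(np.fft.fft2(base))
n_base_peaks = int(np.sum(base_spectrum > 0.5 * base_spectrum.max()))
print(linear, n_base_peaks)
```

The base pattern alone contributes four dots, matching the four that persist in every image, and the third sinusoid adds its own pair on top of them.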

I would like to thank Mr. Entac and Mr. Abat for sharing their insights about this activity.

Grade: 9/10